Basics of parameter estimation

In this example, we’ll go into some of the basics of parameter estimation and

how they are implemented in bilby.

Firstly, consider a situation where you have discrete data \(\{y_0, y_1,\ldots, y_n\}\) taken at a set of times \(\{t_0, t_1, \ldots, t_n\}\). Further, we know that this data is generated by a process which can be modelled by a linear function of the form \(y(t) = m t + c\). We will refer to this model as \(H\). Given a set of data, how can we figure out the coefficients \(m\) and \(c\)?

This is a well studied problem known as linear regression and there already exists many ways to estimate the coefficients (you may already be familiar with a least squares estimator for example).

Here, we will describe a Bayesian approach using nested sampling which might feel

like overkill for this problem. However, it is a useful way to introduce some

of the basic features of bilby before seeing them in more complicated

settings.

The maths

Given the data, the posterior distribution for the model parameters is given by

where the first term on the right-hand-side is the likelihood while the second is the prior. In the model \(H\), the likelihood of the data point \(y_i, t_i\), given a value for the coefficients we will define to be Gaussian distributed as such:

Next, we assume that all data points are independent. As such,

When solving problems on a computer, it is often convenient to work with

the log-likelihood. Indeed, a bilby likelihood must have a log_likelihood()

method. For the normal distribution, the log-likelihood for n data points is

Finally, we need to specify a prior. In this case we will use uncorrelated uniform priors

The choice of prior in general should be guided by physical knowledge about the system and not the data in question.

The key point to take away here is that the likelihood and prior are

the inputs to figuring out the posterior. There are many ways to go about

this, we will now show how to do so in bilby. In this case, we explicitly

show how to write the GaussianLikelihood so that one can see how the

maths above gets implemented. For the prior, this is done implicitly by the

naming of the priors.

The code

In the following example, also available under examples/core_examples/linear_regression.py we will step through the process of generating some simulated data, writing a likelihood and prior, and running nested sampling using bilby.

1#!/usr/bin/env python

2"""

3An example of how to use bilby to perform parameter estimation for

4non-gravitational wave data. In this case, fitting a linear function to

5data with background Gaussian noise

6

7"""

8import bilby

9import matplotlib.pyplot as plt

10import numpy as np

11from bilby.core.utils import random

12

13# Sets seed of bilby's generator "rng" to "123" to ensure reproducibility

14random.seed(123)

15

16# A few simple setup steps

17label = "linear_regression"

18outdir = "outdir"

19bilby.utils.check_directory_exists_and_if_not_mkdir(outdir)

20

21

22# First, we define our "signal model", in this case a simple linear function

23def model(time, m, c):

24 return time * m + c

25

26

27# Now we define the injection parameters which we make simulated data with

28injection_parameters = dict(m=0.5, c=0.2)

29

30# For this example, we'll use standard Gaussian noise

31

32# These lines of code generate the fake data. Note the ** just unpacks the

33# contents of the injection_parameters when calling the model function.

34sampling_frequency = 10

35time_duration = 10

36time = np.arange(0, time_duration, 1 / sampling_frequency)

37N = len(time)

38sigma = random.rng.normal(1, 0.01, N)

39data = model(time, **injection_parameters) + random.rng.normal(0, sigma, N)

40

41# We quickly plot the data to check it looks sensible

42fig, ax = plt.subplots()

43ax.plot(time, data, "o", label="data")

44ax.plot(time, model(time, **injection_parameters), "--r", label="signal")

45ax.set_xlabel("time")

46ax.set_ylabel("y")

47ax.legend()

48fig.savefig("{}/{}_data.png".format(outdir, label))

49

50# Now lets instantiate a version of our GaussianLikelihood, giving it

51# the time, data and signal model

52likelihood = bilby.likelihood.GaussianLikelihood(time, data, model, sigma)

53

54# From hereon, the syntax is exactly equivalent to other bilby examples

55# We make a prior

56priors = dict()

57priors["m"] = bilby.core.prior.Uniform(0, 5, "m")

58priors["c"] = bilby.core.prior.Uniform(-2, 2, "c")

59

60# And run sampler

61result = bilby.run_sampler(

62 likelihood=likelihood,

63 priors=priors,

64 sampler="dynesty",

65 nlive=250,

66 injection_parameters=injection_parameters,

67 outdir=outdir,

68 label=label,

69 rstate=random.rng,

70)

71

72# Finally plot a corner plot: all outputs are stored in outdir

73result.plot_corner()

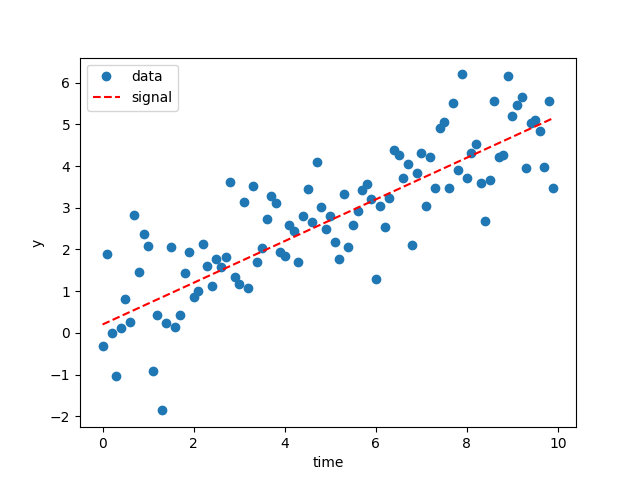

Running the script above will make a few images. Firstly, the plot of the data:

The dashed red line here shows the simulated signal.

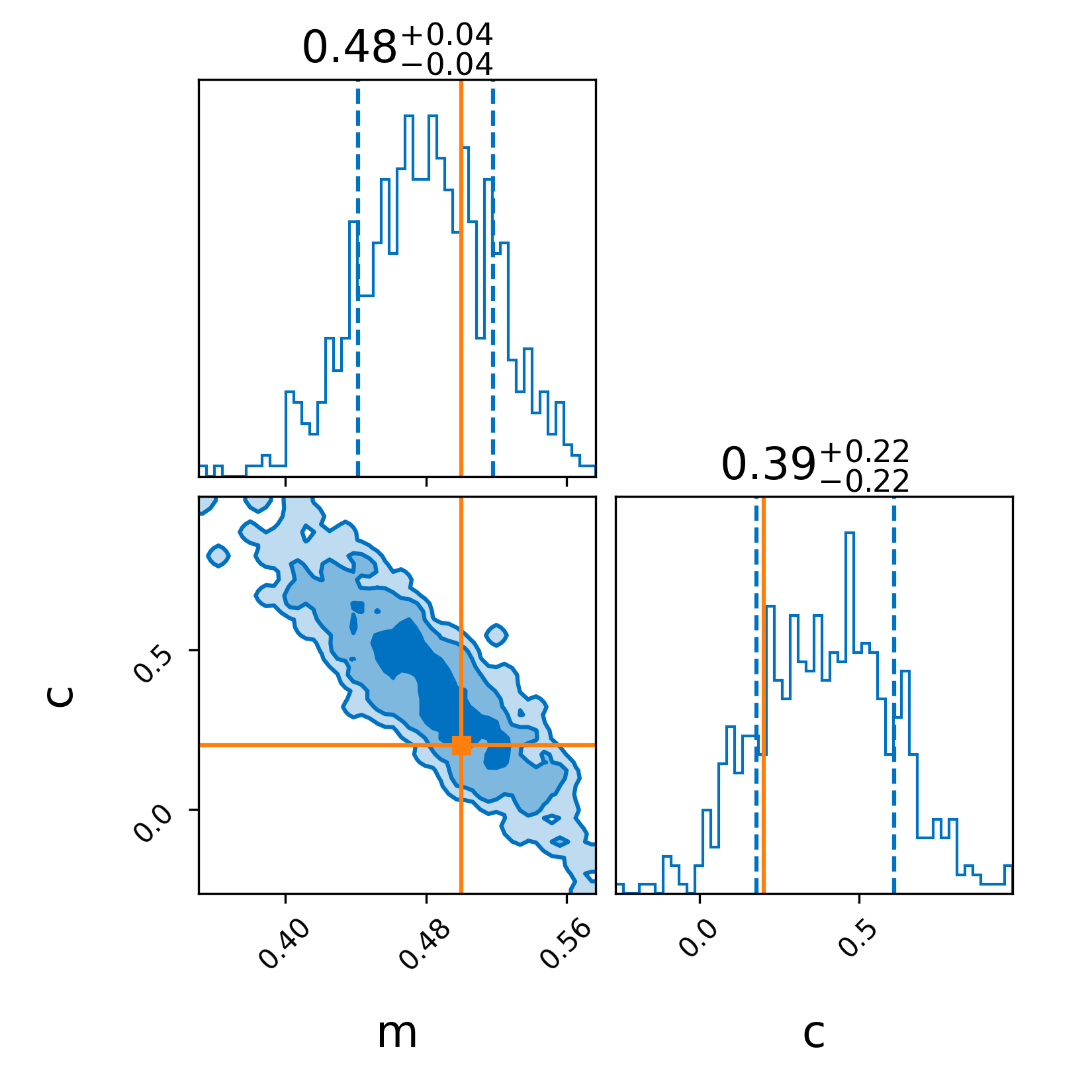

Secondly, because we used the plot=True argument in run_sampler we generate a corner plot

The solid lines indicate the simulated values which are recovered quite easily. Note, you can also make a corner plot with result.plot_corner().

Final thoughts

While this example is somewhat trivial, hopefully you can see how this script can be easily modified to perform parameter estimation for almost any time-domain data where you can model the background noise as Gaussian and write the signal model as a python function (i.e., replacing model).